Trusted by enterprises and innovation-driven organizations worldwide

Enterprise AI Blueprints Designed for Real-World Impact

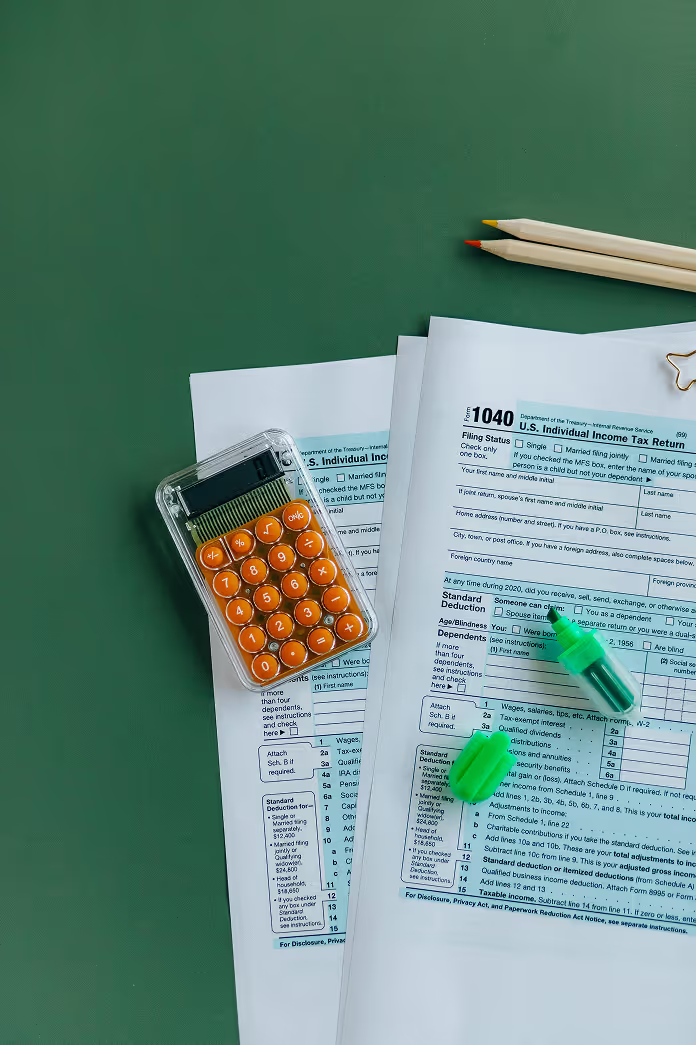

Finance

Construction

QueriLynx

A unified, multi-agent platform that lets users explore data from multiple sources without writing code.

Education

Virtual Teaching Assistant

A RAG-native AI copilot that streamlines teaching tasks, personalizes learning, and enhances student engagement in higher education.

Finance

Insurance

DocMind

An AI-driven document assistant that streamlines workflows through intelligent sorting, key field extraction, and deep document analysis.

Platforms That Power Our Solutions

How Enterprises Operationalize AI. Confidently.

Explore What's New at DKube

Got questions?

We’ve got answers

What does DKube deliver?

How fast can we go from idea to production?

What is the engagement model?

Do you support post-deployment operations?

How do we collaborate during delivery?

How do we get started with DKube?
